About

YVIC Research Lab examines how the geometry of pretrained representations constrains downstream control and adaptation in language models. The lab's work focuses on three areas:

- Preserving semantic structure under aggressive compression.
- Characterizing the limits of prompt-based control at the representation level.
- Explaining why certain regions of the representation manifolds inherited from pretraining resist reliable generalization, even under strong supervision.

Research interests

- Semantic manifold preservation in capacity-constrained multilingual models

- Representation-level analysis of prompt and prefix control

- Geometric origins of generalization failures in post-pretraining adaptation

- On-device deployment under strict efficiency and privacy constraints

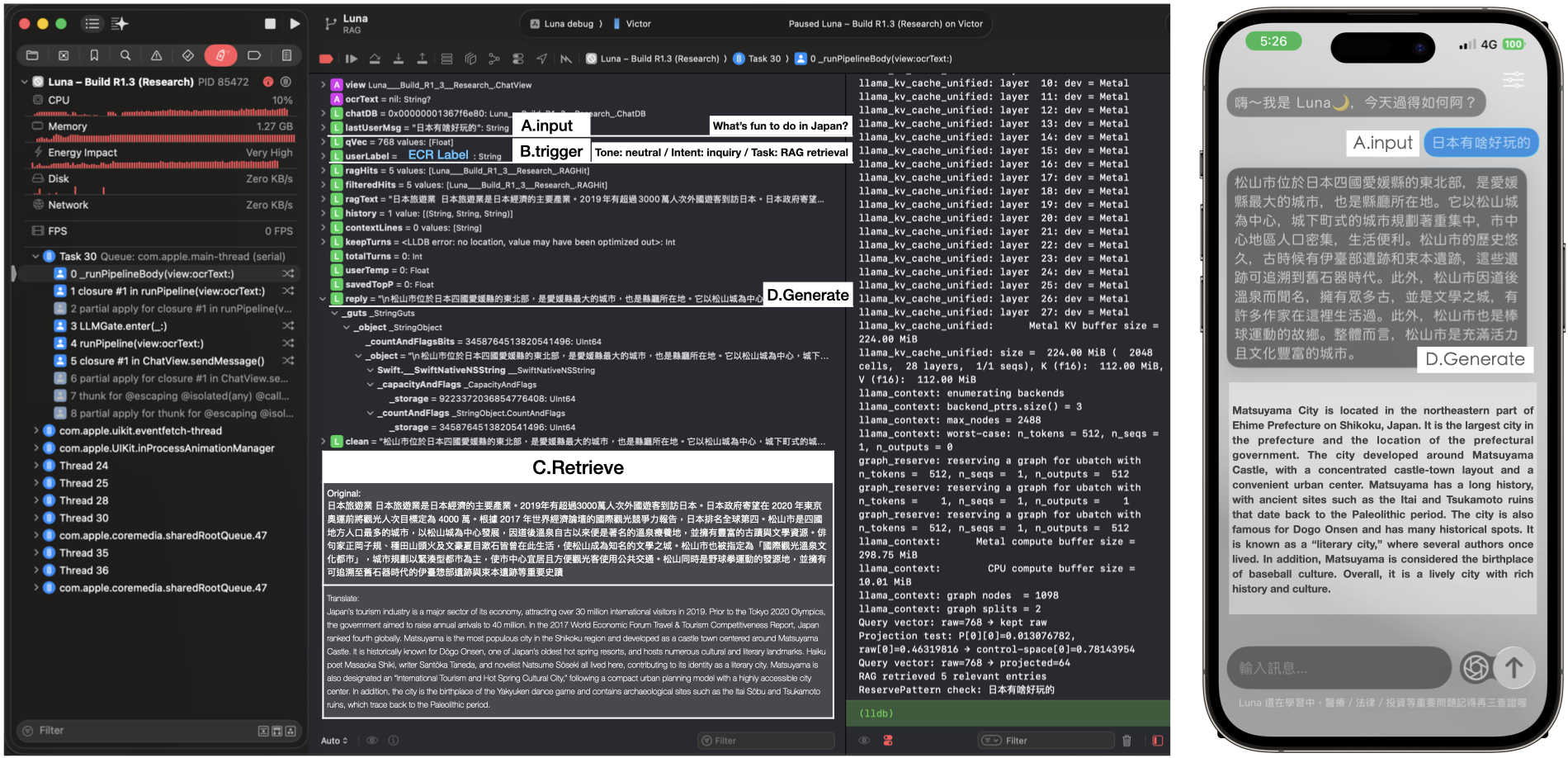

Luna (on-device system)

Luna is a fully offline, on-device research prototype built on a quantized 0.6B-parameter model. It is used to validate manifold-preserving compression techniques under real deployment conditions, including local retrieval-augmented generation (RAG) and Metal-accelerated inference on consumer hardware.

Luna is presented as a research artifact rather than a commercial product.